Rafay at Gartner IOCS 2025: Modern Infrastructure, Delivered as a Platform

As a sponsor of Gartner IOCS 2025, Rafay highlights why modern I&O needs a platform operating model to keep pace with cloud-native and AI workloads.


The Rafay Platform helps cloud providers instantly monetize GPU infrastructure and helps enterprises speed up AI application delivery, while unlocking new revenue streams, improving profitability, and keeping systems secure.

Many GPUs sit underutilized. The Rafay Platform stack makes AI application delivery faster, more accurate, and more secure, giving companies the competitive edge they need to act on evolving GenAI initiatives across the business.

Whether you are a GPU cloud or sovereign cloud provider, the Rafay Platform supports national data sovereignty, residency, and compliance requirements so teams can worry less about infrastructure and focus their energy on innovation.

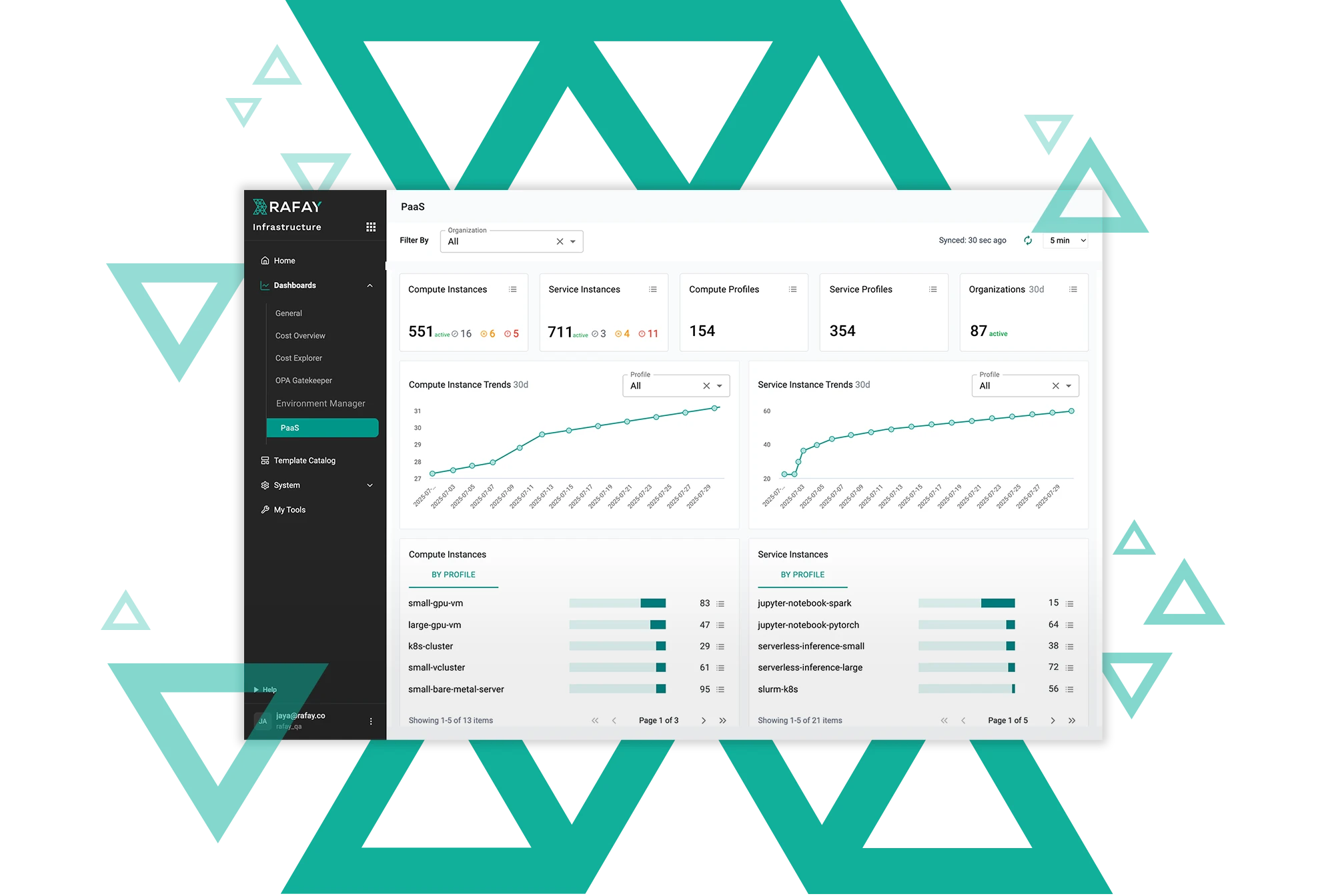

Accelerate your time-to-market with high-value NVIDIA hardware by rapidly launching a PaaS for GPU consumption, complete with a customizable storefront experience for your internal and external customers.

Transform the way you build, deploy, and scale machine learning with Rafay’s comprehensive MLOps platform that runs in your data center and any public cloud.

Data scientists can quickly access a fully functional data science environment without the need for local setup or maintenance. They can be productive sooner by focusing on coding and analysis rather than managing AI infrastructure.

Help developers experiment with GenAI by enabling them to rapidly train, tune, and test large models, along with approved tools such as vector databases and inference servers.

The Rafay Platform stack helps platform teams manage AI initiatives across any environment, helping companies realize the following benefits:

Complex processes and steep learning curves shouldn’t prevent developers and data scientists from building AI applications. A turnkey MLOps toolset with support for both traditional and GenAI (LLM-based) models allows them to be more productive without worrying about infrastructure details.

By using GPU resources more efficiently with capabilities such as GPU matchmaking, virtualization, and time-slicing, enterprises reduce the overall infrastructure cost of AI development, testing, and serving in production.

Provide data scientists and developers with a unified, consistent interface for all of their MLOps and LLMOps work regardless of the underlying infrastructure, simplifying training, development, and operational processes.

See for yourself how to turn static compute into self-service engines. Deploy AI and cloud-native applications faster, reduce security & operational risk, and control the total cost of Kubernetes operations by trying the Rafay Platform!